Activating SCR and recovering e-mails from a dead Transport server database!

To skip all the blah-blah-blahs, jump straight to the numbered steps: the procedure for activating SCR is under Scene 1, and the steps for recovering e-mails from a Transport server follow after the lunch break.

Some rattlings

Well, just another bad day of my admin life! I enjoyed a very nice movie on Saturday, crunching popcorn with some friends (of course, missing a show because of my always-late but sweet friends :-P), and a very nice party later that day. That is where an e-mail from one of my colleagues popped up on my phone (hating ActiveSync at this moment) with the very bad news that 3 Exchange 2010 servers in a DAG had crashed. For some reason I felt that this week wasn’t going to be the usual “party-harder” week, but a “hard-working” week to earn some points for my Annual Appraisal due this year. Sunday wasn’t boring either, as one of my over-sweet friends took care of that issue, and I spent my day test-driving the Hyosung GT650R and Hyosung ST7. After a few movies on some Hollywood channels (Die Hard 4, Behind Enemy Lines; I knew I was going to be on fire on Monday. The movie marathon ended with Hangover 2 at 4 am), I went to bed.

I woke up all of a sudden at 12.30 PM, got ready, and rushed to the office through that heavenly Bangalore traffic, with its speed breakers and a couple of fly-over construction sites on the way, and turned red on hearing what had happened over the weekend! What to do? Just another day!!

Scene 1

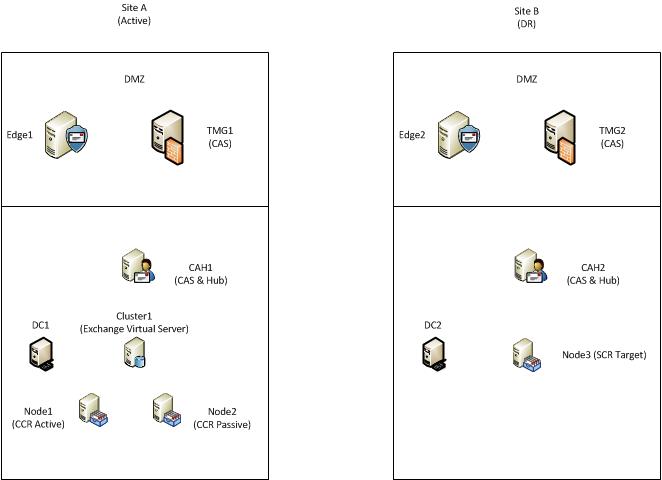

I had been really out of sync with Exchange because of the new skillset I was recently ‘forced’ to acquire on the Network and Security front, and I was waiting for the best opportunity to work on a real Exchange issue. I didn’t expect to land in that spot, working on an infrastructure poorly designed by someone else, and I was least prepared for it. Well, this client had two sites. The logical structure above explains the whole thing in a nutshell (not really a Visio expert, but trying my best ;-) ).

When I landed in the office, I saw one of my friends (who handles that specific client) digging deep into PowerShell, ripping apart the event logs and banging against the services console to no avail. I kept watching him for 10-15 minutes till I managed to get my laptop on, and then asked him what had happened. As if waiting for me to ask, he started whining like a school kid. 😛 He blamed the Windows team, who had patched the Exchange servers with hotfixes that were not in the original ‘approved list’ (remember ITIL?), which ended up sending the Transport service of the CAS & HUB server into a coma. I started digging deeper and, to add to our frustrations, we discovered that the Windows team had not only applied the unapproved security patches, but had also taken it upon themselves to apply Exchange Rollups in an order that would make any Exchange admin lose their temper! To make things even worse, we noticed that not all the Exchange servers were at the same Exchange patch level. More on that sometime later. (At this time, the Exchange DAG issue that cropped up over the weekend had some off-shoots. I’ll explain that later.)

Now, the Exchange server wouldn’t even bother about the e-mails lying in users’ Outboxes, as we found out later when we uncovered (or rather discovered) the design flaws. The Exchange server at the primary site seemed to hate the CAS & HUB server at the secondary site. Eventually, like a true friend, the Edge server in the primary site refused to route the e-mails via his counterpart at the secondary site and saved thousands of e-mails in his own database. I’m on a mission to uncover this mystery, but I’m not sure how far I’ll get, as this client will be dissolved in a few days. If I do, I’ll post that later.

We began using our mathematical brains on permutations and combinations of installing/uninstalling/reinstalling patches on the Exchange servers. With the Business hammering us, we decided to run and hide behind the secondary site. No more blah-blahs; I’ll pen down exactly what we did to fail over to the secondary site (I can hear someone shouting “what’s so great about it?”; it’s just a quick note for a dude/damsel in distress). Keep in mind that the commands below were executed for the mailbox store called “MBX1” located under the storage group “SG1”. For server names, refer to the topology above, but be sure to replace these names with the ones applicable in your environment; otherwise, your right to use the “Comments” section below is revoked! 😛 (just kidding…)

1. We ran the following command for each storage group.

Get-StorageGroupCopyStatus Cluster1\SG1 -StandbyMachine Node3

This step was to ensure that replication was intact. Glad that replication didn’t let us down.

2. We dismounted the databases manually using the command

Dismount-Database Cluster1\SG1\MBX1

for every mailbox store that was mounted. Alternatively, you can use the Exchange Management Console to dismount the databases. (Skip this step if your primary Exchange server/site is down.)

3. Now we logged into Node3 (the SCR target) and ran the following command

Restore-StorageGroupCopy -Identity Cluster1\SG1 -StandbyMachine Node3

This prepares the SCR target for mounting. (Remember to add the -Force switch if your primary site is down or the server is inaccessible; otherwise the command will not work.)

4. Then we disabled the Storage Group Copy. Remember, skipping this step will cause your RecoverCMS to fail. We ran the following command to accomplish this.

Disable-StorageGroupCopy -Identity Cluster1\SG1 -StandbyMachine Node3 -Confirm:$False

5. Now, time to play around with DNS! Open the DNS Management Console (preferably on the DC in the secondary site) and delete the DNS A record for EXCMS1. The reason is that the Exchange Virtual Server EXCMS would still point to the Cluster Virtual IP on the primary site, and we wouldn’t want that to interfere with our Operation Failover. We just need to delete the A record, and Exchange will take care of the rest when you perform the next step!

6. Now run the following command in a command prompt (it’s okay; we too use our PowerShell windows as command prompts)

Setup.com /RecoverCMS /CMSName:Cluster1 /CMSIPAddress:<IPAddress>

The CMSIPAddress would most likely be an IP of one of the NICs on Node3. I would prefer to use a Virtual IP mapped to one of the NIC interfaces in the production VLAN, but it’s your choice.

7. Next, mount the databases using the Exchange Management Console. For all the old-school folks who prefer PowerShell, use the Mount-Database cmdlet.
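Steps 1 to 4 repeat once per storage group, so it can help to generate the command lines up front and review them before pasting them into the Exchange Management Shell. Below is a minimal Python sketch that only builds strings; it runs nothing against Exchange. The function name `scr_activation_commands`, its parameters, and the `Server\StorageGroup\Database` identity form are assumptions made for illustration; the cmdlets and switches are the ones used in the steps above.

```python
def scr_activation_commands(cms, standby, storage_groups, primary_down=False):
    """Build the per-storage-group commands from steps 1-4 as plain strings.

    storage_groups maps a storage group name to its database name,
    e.g. {"SG1": "MBX1"}. Nothing is executed; the output is for review.
    """
    commands = []
    for sg, db in sorted(storage_groups.items()):
        sg_id = f"{cms}\\{sg}"    # assumed identity form: Server\StorageGroup
        db_id = f"{sg_id}\\{db}"  # assumed identity form: Server\StorageGroup\Database
        # Step 1: check the health of the SCR copy.
        commands.append(f"Get-StorageGroupCopyStatus {sg_id} -StandbyMachine {standby}")
        # Step 2: dismount on the source; skipped when the primary is unreachable.
        if not primary_down:
            commands.append(f"Dismount-Database {db_id}")
        # Step 3: make the SCR copy mountable; -Force when the primary is down.
        restore = f"Restore-StorageGroupCopy -Identity {sg_id} -StandbyMachine {standby}"
        if primary_down:
            restore += " -Force"
        commands.append(restore)
        # Step 4: disable the copy so that /RecoverCMS does not fail.
        commands.append(
            f"Disable-StorageGroupCopy -Identity {sg_id} -StandbyMachine {standby} -Confirm:$False"
        )
    return commands


if __name__ == "__main__":
    for line in scr_activation_commands("Cluster1", "Node3", {"SG1": "MBX1"}):
        print(line)
```

Passing `primary_down=True` drops the dismount step and appends `-Force` to the restore command, mirroring the two notes in steps 2 and 3.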

Whoa!!! Now we had the mailboxes up and running, and users were able to send/receive internally and send mails to the internet. But incoming mails? Only then did we think about the Edge server at the primary site, who was still receiving the e-mails (the MX record of Site A is configured with the lowest cost, so mail goes there first). He still wouldn’t speak to his counterparts in Site B. At this point, we were in no mood to troubleshoot the issue as my engineers were already starving! I had skipped my breakfast (of course, I overslept after the movies) and it was already 6 pm. We decided to stop the Transport service on Edge1, effectively forcing e-mail to be routed to the next MX record.

<Post Lunch>

By the time we got back to our desks, we were happy to see e-mails from our managers appreciating us, except for one mail asking what had happened to the e-mails sent since morning! Duh!! Let’s get back to work!!!

I started digging deep into those queue databases (expenditure: 30 min) and jumped up with a plan. Here is what my plan said and what we did:

1. Stop the MS Exchange Transport service on the affected Edge server (we did it before we left for lunch).

2. We then opened the EdgeTransport.exe.config file located in the Exchange server’s Bin folder with a text editor (our common friend, Notepad). We made a note of the values of the “QueueDatabasePath” and “QueueDatabaseLoggingPath” keys, which give the location of the Transport database and its log files respectively. Luckily, we had both locations pointing to the same path (somewhere on the Q: drive).

3. We browsed to that location. The folder had the following files (along with a Message Tracking folder, which we really didn’t bother about at this moment)

- Mail.que – Our vault (database) of messages in the queue and most valued

- Trn.chk – A checkpoint file

- Trn.log – Current log file

- Trntmp.log – The transaction log file that is created in advance to be used next

- Trnnnn.log – Older transaction log files

- Trnres00001.jrs – Reserve log file, placeholder 1

- Trnres00002.jrs – Reserve log file, placeholder 2

- Temp.edb – Queue database schema verifier

Doesn’t it resemble a database/log file structure? ESE, yeah! Extensible Storage Engine. Just that the EDB file is replaced by a QUE file for the database. The e-mails in the queue are no longer stored as individual items like in Exchange 2003, but inside an ESE database (I can hear someone saying “as if we don’t know that”. Sorry Gurus 😀 ).

We copied all these files to the other Edge server (let’s call him the target Edge server, or Edge2). Alternatively, you can run steps 4 and 5 on Edge1 itself before copying the files to Edge2. Either way, make sure you work on a copy and leave the original files untouched.
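Steps 2 and 3 plus the copy can be rehearsed in a few lines. The sketch below is a Python illustration, not what we ran that day: it reads the two keys from the `appSettings` section of EdgeTransport.exe.config and copies the files from both folders into a staging folder. The function names `queue_paths` and `stage_copy` and the staging folder are hypothetical, and it assumes the keys sit as `add` elements with `key` and `value` attributes directly under `appSettings`.

```python
import shutil
import xml.etree.ElementTree as ET
from pathlib import Path


def queue_paths(config_file):
    """Return (database_dir, log_dir) read from the <appSettings> keys."""
    root = ET.parse(config_file).getroot()
    settings = {
        add.get("key"): add.get("value")
        for add in root.findall("./appSettings/add")
    }
    return settings["QueueDatabasePath"], settings["QueueDatabaseLoggingPath"]


def stage_copy(db_dir, log_dir, staging_dir):
    """Copy the top-level files of both folders into staging_dir.

    The originals are left untouched; sub-folders (for example the
    message tracking folder) are skipped.
    """
    staging = Path(staging_dir)
    staging.mkdir(parents=True, exist_ok=True)
    copied = []
    # dict.fromkeys drops the duplicate when both settings point to one folder.
    for folder in dict.fromkeys([Path(db_dir), Path(log_dir)]):
        for item in sorted(folder.iterdir()):
            if item.is_file():
                shutil.copy2(item, staging / item.name)
                copied.append(item.name)
    return copied
```

Run it only after the Transport service on the source server is stopped (step 1); while the service is running the queue files are in use.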

4. Open a command prompt (or a PowerShell window) and switch to the directory where this copy of the queue database resides. We ran the following command to perform a soft recovery of the database.

eseutil /r Trn /d.

Here

/r – Recovery

/d. – indicates the database to recover is in the current directory (from where you’re running the CMD/PS)

Trn – Indicates the log files base name (don’t worry – usually transport databases have trn as their base)

Optionally, you can add the /8 switch to the command, which speeds up recovery by setting an 8 KB database page size, especially when you have a large number of transaction logs.

5. We then ran a defrag of the database using

eseutil /d mail.que

Here /d is the defragmentation mode. It is not the same as the /d. used in step 4, where it only tells the recovery where the database lives.

Now this database is ready to take over the place of the current database on Edge2, but we need to prepare the other server for that. If you are running steps 4 & 5 on Edge1, copy the defragged database to Edge2 now.
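Since the defrag in step 5 only makes sense when the soft recovery in step 4 succeeded, the two calls can be chained so that the second is skipped on failure. This is a small Python sketch of that idea, not part of what we ran: the function name `repair_queue_db` and the injectable `run` parameter are hypothetical, and it assumes `eseutil` is on the PATH and reports failure through a non-zero exit code.

```python
import subprocess


def repair_queue_db(work_dir, run=subprocess.call):
    """Run soft recovery, then defrag, inside work_dir; stop at the first failure.

    `run` is injectable so the sequence can be rehearsed on a machine
    that has no eseutil installed.
    """
    steps = [
        ["eseutil", "/r", "Trn", "/d."],  # step 4: replay the Trn*.log files
        ["eseutil", "/d", "mail.que"],    # step 5: offline defragmentation
    ]
    for cmd in steps:
        code = run(cmd, cwd=work_dir)
        if code != 0:  # assumption: non-zero exit code means failure
            raise RuntimeError(f"{' '.join(cmd)} exited with code {code}")
    return [" ".join(cmd) for cmd in steps]
```

Point `work_dir` at the folder holding the copy of the queue files, never at the live queue folder.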

6. First, clear out the e-mails currently in the queues on the target Edge server. For this, pause the MS Exchange Transport service, which prevents the server from accepting any new mails. Then open the Queue Viewer and wait for the active queues to drop to zero. Usually only the Unreachable and Poison queues still show messages; if anything else does, time to check the mail flow, mate! 🙂 Run the following PowerShell commands to resubmit the messages in the Unreachable queue and then export any messages that are really unreachable. We were lucky as we didn’t have any mails in the queues, so we jumped to Step 9.

To Resubmit

Retry-Queue -Identity "Unreachable" -Resubmit $True

Wait a few minutes to give the messages time to be retried. To export any messages still remaining there in .eml format, run

Get-Message -Queue "Unreachable" | Export-Message -Path "a folder"

7. Now, time to check out the Poison queue. I wouldn’t really be bothered about these messages, but just in case you want to attempt to deliver them, you can try “Resume” in the Queue Viewer. Any messages here can be exported using the second cmdlet in Step 6, replacing “Unreachable” with “Poison”. Remember to specify a different folder, unless you have a specific reason to mix the two sets.

8. Follow this step only if the database from the source is quite old and you have a feeling that the e-mails might bounce because of the message expiration time on the target Edge server.

Run the following cmdlet to increase the message expiration time.

Set-TransportServer -Identity <Target Edge Server Name> -MessageExpirationTimeout <Time Out Interval In dd.hh:mm:ss Format>

9. We then examined the EdgeTransport.exe.config on Edge2 and noted the locations of the database and transaction log files, as we did in step 2.

10. Now, time to really replace the database. We stopped the MS Exchange Transport service on the target Edge server (Edge2 in our case), replaced the log files and database of the current queues with the ones copied from Edge1, and started the MS Exchange Transport service again.

Whew!!! All 10000 e-mails, who thought they would never be delivered, started reaching the users’ mailboxes, and within a few minutes the queue was flushed! 🙂 If the queue is comparatively large, you might want to consider keeping the MS Exchange Transport service paused till the messages currently in the queue are delivered.
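For the swap in step 10, it is safer to move the target server’s own queue files aside than to overwrite them, so there is something to roll back to. The following Python sketch shows that idea under stated assumptions: the function names, the backup folder, and the file-name patterns (derived from the file list in step 3) are illustrative, and stopping and starting the Transport service is left to you.

```python
import fnmatch
import shutil
from pathlib import Path

# Patterns derived from the file list in step 3 (an assumption, adjust as needed).
QUEUE_PATTERNS = ("mail.que", "trn*.log", "trn.chk", "trnres*", "temp.edb")


def is_queue_file(name):
    """True when the file name looks like part of the transport queue database."""
    lowered = name.lower()
    return any(fnmatch.fnmatchcase(lowered, pattern) for pattern in QUEUE_PATTERNS)


def swap_queue_files(live_dir, recovered_dir, backup_dir):
    """Move the live queue files to backup_dir, then copy the recovered set in.

    Run only while the Transport service on the target server is stopped.
    """
    live, recovered, backup = Path(live_dir), Path(recovered_dir), Path(backup_dir)
    backup.mkdir(parents=True, exist_ok=True)
    for item in sorted(live.iterdir()):
        if item.is_file() and is_queue_file(item.name):
            shutil.move(str(item), str(backup / item.name))  # keep, do not delete
    placed = []
    for item in sorted(recovered.iterdir()):
        if item.is_file() and is_queue_file(item.name):
            shutil.copy2(item, live / item.name)
            placed.append(item.name)
    return placed
```

If the recovered database misbehaves, stopping the service and moving the files back from the backup folder restores the previous state.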

Scene 2

Well, at this point it was already time to leave the office, and just when I was about to start packing my bag, my manager came up and said “Muthu, the Lagged Copy Exchange server that had issues on Saturday doesn’t seem to be really communicating with the other Exchange servers in the DAG. You better check that before leaving, as you know what this client is capable of!!!”. Just then I remembered that one of my team members had mentioned difficulties in booting up this server. Though they had managed to turn the server on, the cluster was not really available. I didn’t really have a choice now, and I’ll explain that story a bit later! 😉

I will also post, a bit later, how we failed the services back from SCR in the DR site to the live production environment!

Muthu

Team@MSExchangeGuru.com

December 20th, 2011 at 12:08 pm

Muthu,

Great Article !!! keep the good work … looking forward to the next one frm you

December 22nd, 2011 at 12:31 am

Very well written and explained

December 22nd, 2011 at 8:25 am

Thanks both! Such comments from our beloved readers/visitors/subscribers always keep encouraging for more! For now, BRB in few days with two other articles that I promised. 🙂

February 15th, 2012 at 6:56 am

Great Article and well explained!!! I am a FAN of you guys…

September 20th, 2012 at 9:55 am

Very good and well explained one.

March 8th, 2015 at 1:58 pm

It sounded like a good story & i tried my best to understand . Thanks for adding the comic views but really it was some good troubleshooting & knowledge that you share .